Processing:

Subscribe to the future of healthcare.

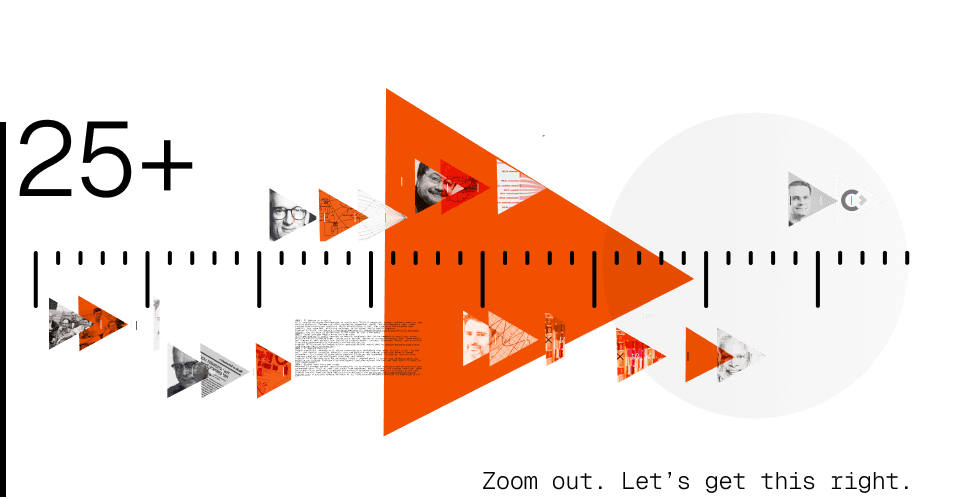

AC/P#001/Draft. A rewind: 75 years of autonomous computing in healthcare

Sent:

November 30, 2025

Welcome to Processing #001, the first draft of the first issue of a newsletter from the Automate.clinic team.

It’s tempting to treat every technological advance like it just happened, like magic. Starting this new venture made us want to pause and try to chronicle AI in healthcare from the start. To look at the ingredients of that magic.

When we did, it became obvious that medical breakthroughs don’t arrive clean. They’re stacked on old systems, outmoded research, and ideas that died yet somehow still held them up. It gets even more tangled when you trace the histories of clinical advances and digital technology together. Their timelines didn’t just intertwine; they collided and left pieces that shape our present. Some of those pieces clicked; others created systems that need reform. Understanding them, we hope, can point us to what we need to build next.

We are convinced that AI in healthcare will be hard to implement without physicians in the design process who understand not only the history of AI but also how best to bring it to the patient’s bedside.

The 75-year AI/healthcare rewind:

From “thinking machines” to predictive molecular drug design.

We didn’t set out to compile a complete history, just enough to grasp the events that shaped autonomous computing in care and, in the process, broaden our perspective. What follows is meant as a kind of anti-amnesiac: a reminder that while AI seems new, it has been developing alongside medicine for a long time.

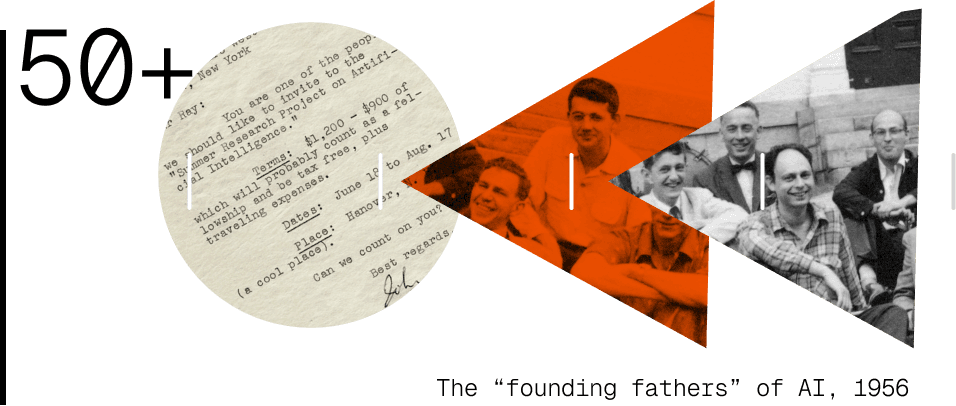

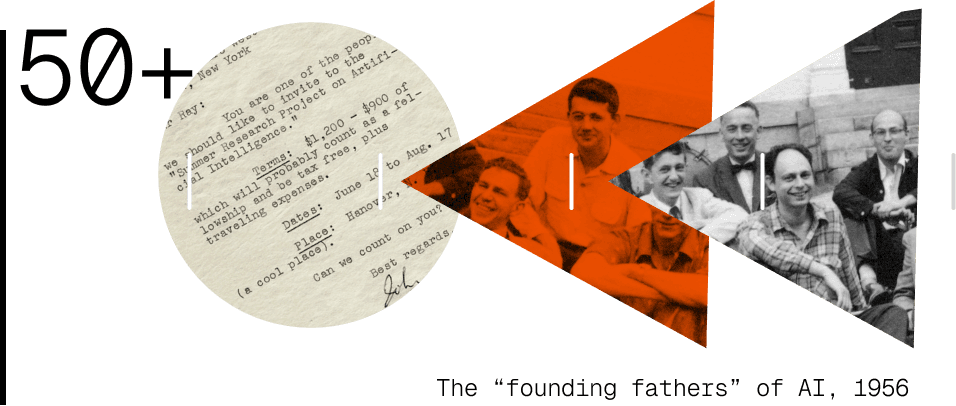

1950+ | Inviting A.I.

Four researchers, Claude Shannon, John McCarthy, Nathaniel Rochester, and Marvin Minsky, organize a weeks-long summer brainstorm about “thinking machines” at Dartmouth College in 1956. While planning it, they settle on the term “Artificial Intelligence” for the event’s title; a handful of other researchers join them. The workshop is widely credited with establishing AI as a distinct field of study, and medical diagnosis would soon become one of its most promising areas. The organizers are later counted among the founding fathers of AI; somehow, there were only fathers.

# aside: John McCarthy, then an Assistant Professor of Mathematics at Dartmouth, later said he chose the neutral term “artificial intelligence” partly to keep the new field clear of cybernetics and its towering figure, MIT mathematician Norbert Wiener, whom he wanted neither to accept as a guru nor to argue with. Wiener was surely tough to argue semantics with.

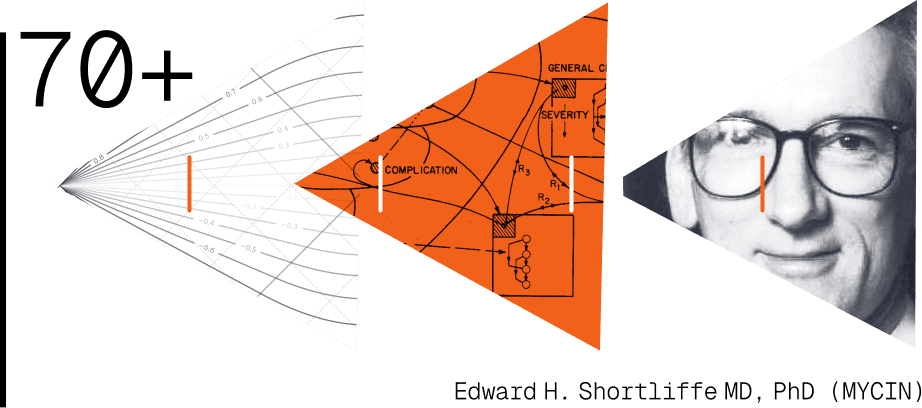

1970+ | Computer prescriptions become possible

Stanford researchers develop MYCIN, one of the first rule-based expert systems. It quizzes doctors with a series of simple questions and matches their answers against roughly 600 rules to help identify the bacteria behind serious infections and recommend antibiotics. In a blinded evaluation, its recommendations are judged acceptable about 65% of the time, slightly better than five Stanford faculty members given the same cases. Just as notable, it can explain itself, answering why it asked a question and how it reached a conclusion. MYCIN reasons backward from possible diagnoses to the evidence that would support them and weighs uncertain evidence with “certainty factors,” showing that clinical reasoning, which rarely deals in single certain truths, can still be encoded as explicit, inspectable rules.

# aside: The name MYCIN comes from “-mycin,” the suffix shared by many antibiotics.
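To make “certainty factors” and backward reasoning concrete, here is a minimal sketch in Python. It assumes the commonly cited MYCIN conventions: a rule’s conclusion gets the rule’s certainty factor (CF) times its weakest premise, a premise only counts above a 0.2 cut-off, and two positive CFs for the same conclusion combine as CF1 + CF2 × (1 − CF1). The rules, findings, and the `organism_x` hypothesis are hypothetical placeholders, not MYCIN’s actual knowledge base.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    premises: list[str]  # findings or sub-goals that must all hold (at least one)
    conclusion: str      # hypothesis the rule supports
    cf: float            # rule strength in (0, 1]; positive evidence only here

PREMISE_THRESHOLD = 0.2  # a premise counts only if its certainty exceeds this

def combine(cf1: float, cf2: float) -> float:
    """Merge two positive certainty factors for the same conclusion."""
    return cf1 + cf2 * (1 - cf1)

def certainty(goal: str, rules: list[Rule], facts: dict[str, float]) -> float:
    """Backward-chain from `goal` to known facts (rules assumed acyclic)."""
    if goal in facts:  # answered directly by the clinician
        return facts[goal]
    total = 0.0
    for rule in rules:
        if rule.conclusion != goal:
            continue
        # Recursively establish each premise; the weakest one limits the rule.
        weakest = min(certainty(p, rules, facts) for p in rule.premises)
        if weakest <= PREMISE_THRESHOLD:
            continue  # premises too uncertain, so the rule does not fire
        total = combine(total, rule.cf * weakest)
    return total

# Hypothetical rules and clinician answers, for illustration only.
rules = [
    Rule(["gram_negative", "rod_shaped"], "organism_x", 0.6),
    Rule(["hospital_acquired"], "organism_x", 0.3),
]
facts = {"gram_negative": 1.0, "rod_shaped": 0.8, "hospital_acquired": 0.5}

print(round(certainty("organism_x", rules, facts), 3))  # 0.558
```

Two moderately supportive rules push the conclusion higher than either alone, but never past 1.0, which is the behavior the combination formula was designed for.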

1971 | Systems dialogue: initiated

Following early natural-language breakthroughs such as SHRDLU (1968-1970) at MIT, a system that could reason about typed commands within a constrained “blocks world,” ARPA funds a five-year program in 1971 called Speech Understanding Research (SUR). Its goal is a system that understands connected spoken English within a limited vocabulary and task domain. The program produces research prototypes rather than products, but its progress points the way toward today’s conversational language systems, including those now entering medicine.

# aside: HARPY, Carnegie Mellon’s entry in the program, achieved 91% sentence accuracy with a vocabulary of about 1,000 words, beating the program’s own targets.
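HARPY is often credited with introducing beam search: instead of exploring every path through its network of possible word sequences, it kept only the best-scoring partial hypotheses at each step and pruned the rest. The sketch below illustrates that pruning idea on a tiny, hypothetical word network with made-up log-probability scores; it is not HARPY’s actual network or acoustic model.

```python
import math

# Hypothetical word network with made-up log-probabilities (higher is better).
# It is acyclic and every path ends at "</s>"; HARPY's real network was far larger.
NETWORK = {
    "<s>": {"show": math.log(0.6), "list": math.log(0.4)},
    "show": {"papers": math.log(0.7), "authors": math.log(0.3)},
    "list": {"papers": math.log(0.5), "authors": math.log(0.5)},
    "papers": {"</s>": 0.0},
    "authors": {"</s>": 0.0},
}

def beam_search(network, beam_width=2, start="<s>", end="</s>"):
    """Return (log score, path) of the best complete path found within the beam."""
    beam = [(0.0, [start])]  # partial hypotheses: (cumulative log score, words)
    finished = []
    while beam:
        candidates = []
        for score, path in beam:
            for word, logp in network.get(path[-1], {}).items():
                extended = (score + logp, path + [word])
                if word == end:
                    finished.append(extended)
                else:
                    candidates.append(extended)
        # Prune: keep only the `beam_width` best partial paths, discard the rest.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beam = candidates[:beam_width]
    return max(finished, key=lambda c: c[0]) if finished else None

score, words = beam_search(NETWORK)
print(" ".join(words[1:-1]), round(math.exp(score), 2))  # show papers 0.42
```

A narrower beam is faster but risks discarding the path that would have won; that speed-for-certainty trade-off is what made real-time recognition feasible on 1970s hardware.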

1980s | Re: QMR Was: INTERNIST-1

Developed at the University of Pittsburgh over roughly a decade, INTERNIST-1 assists with diagnosis in internal medicine. It and its successor, Quick Medical Reference (QMR), stand apart because their authors choose not to adopt probabilistic models, arguing that medicine is more qualitative than quantitative. Instead of a Bayesian approach, they implement a heuristic, rule-based differential diagnosis. The result is custom logic for medical reasoning that can set aside entire branches of competing hypotheses at once, placing QMR in its own class of specialized systems.

# aside: By avoiding Bayesian math, QMR sidestepped a long-standing clinical resistance to quantitative models whose reasoning was hard to inspect, the same concern now voiced about “black box” AI.
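INTERNIST-1 is usually described as linking each disease to its findings with two weights: an “evoking strength” (0-5, how strongly a finding suggests the disease) and a “frequency” (1-5, how often the disease produces the finding). The sketch below follows that general shape, rewarding findings that are present and penalizing expected findings that are absent, then keeping only the hypotheses close to the leader. The point tables, disease profiles, and the `margin` cut-off are hypothetical simplifications, not INTERNIST-1’s published constants or its full partitioning algorithm.

```python
# Hypothetical point tables; INTERNIST-1's real weights differ.
EVOKING_POINTS = {0: 1, 1: 4, 2: 10, 3: 20, 4: 40, 5: 80}
FREQUENCY_PENALTY = {1: 1, 2: 4, 3: 7, 4: 15, 5: 30}

# Hypothetical disease profiles: finding -> (evoking strength 0-5, frequency 1-5).
PROFILES = {
    "disease_a": {"fever": (1, 4), "jaundice": (3, 4), "ascites": (2, 2)},
    "disease_b": {"fever": (2, 5), "jaundice": (1, 2), "rash": (3, 4)},
    "disease_c": {"rash": (4, 5), "joint_pain": (3, 4)},
}

def score(disease, present, absent):
    """Reward findings that are present; penalize expected findings that are absent."""
    total = 0
    for finding, (evoking, frequency) in PROFILES[disease].items():
        if finding in present:
            total += EVOKING_POINTS[evoking]
        elif finding in absent:
            total -= FREQUENCY_PENALTY[frequency]
        # Findings not yet asked about neither help nor hurt.
    return total

def differential(present, absent, margin=20):
    """Rank diseases and keep only those within `margin` points of the leader,
    setting aside the rest of the hypothesis space in one step."""
    ranked = sorted(((score(d, present, absent), d) for d in PROFILES), reverse=True)
    best = ranked[0][0]
    return [(s, d) for s, d in ranked if best - s <= margin]

print(differential({"fever", "jaundice"}, {"rash"}))  # [(24, 'disease_a')]
```

Every step is an explicit addition or subtraction a clinician can audit, which is the kind of inspectable reasoning the aside credits for QMR’s acceptance.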

1990+ | Bayesian networks resurface in clinical decision support

In a push and pull, probabilistic reasoning through Bayesian networks (BNs) regains popularity for diagnosis and treatment planning. The approach shows its strongest results in areas such as cardiology and oncology, where causal pathways are comparatively well understood, but runs into trouble in domains with less structured data or unclear causal pathways.

# aside: Bayesian networks struggle most with subjective, overlapping symptoms, as in mental health diagnostics, and work best with clean, consistent data, which is often hard to come by.
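At the core of every Bayesian network is Bayes’ rule: start from a prior belief in each diagnosis and update it by how likely the observed findings are under each one. The sketch below shows the smallest useful case, one diagnosis node with two findings assumed conditionally independent given the diagnosis (a naive-Bayes structure). The hypotheses (`mi` for a hypothetical myocardial infarction) and every probability are made-up placeholders, not clinical estimates; real clinical BNs add many nodes and dependencies, which is exactly where clean data becomes scarce.

```python
def posterior(prior, likelihoods, findings):
    """Bayes' rule over competing hypotheses.

    prior:       hypothesis -> P(hypothesis), summing to 1
    likelihoods: hypothesis -> {finding: P(finding present | hypothesis)}
    findings:    finding -> True if present, False if absent
    Assumes findings are conditionally independent given the hypothesis.
    """
    joint = {}
    for hypothesis, p in prior.items():
        for finding, present in findings.items():
            p_present = likelihoods[hypothesis][finding]
            p *= p_present if present else 1 - p_present
        joint[hypothesis] = p
    evidence = sum(joint.values())  # P(findings), the normalizing constant
    return {h: p / evidence for h, p in joint.items()}

# Made-up numbers for illustration only; not clinical estimates.
prior = {"mi": 0.1, "no_mi": 0.9}
likelihoods = {
    "mi": {"chest_pain": 0.9, "st_elevation": 0.7},
    "no_mi": {"chest_pain": 0.3, "st_elevation": 0.05},
}
result = posterior(prior, likelihoods, {"chest_pain": True, "st_elevation": True})
print(round(result["mi"], 3))  # 0.824
```

With these placeholder numbers, two supportive findings lift a 10% prior to roughly 82%; if the findings were in fact correlated, the independence assumption would overstate that jump.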

1995+ | Modeling cause, not just correlation

As the limitations of classic Bayesian networks become clearer, researchers such as Judea Pearl (Causality: Models, Reasoning, and Inference) and Peter Lucas (work on Alzheimer’s detection) turn to formal causal graphs and the do-calculus, a set of rules that reduces questions about interventions to probabilities that can be estimated from observed data, to improve medical reasoning. While Pearl’s theory gains traction, clinical use lags: the methods are only as sound as the assumed causal graph, and healthcare rarely produces the randomized, controlled data that could confirm those assumptions.

# aside: In healthcare, “just run the experiment” often isn’t an option the way it is in other industries. Ethical, logistical, and privacy limits mean causality often has to be inferred rather than observed.

# aside 2: Judea Pearl explaining why he thinks his work discovered conscious AI, YouTube.

(Video production advisement: Dramatic.)

2000+ | Causal inference, applied

Building on Judea Pearl’s theory, researchers begin applying the do-calculus and causal graphs to real-world healthcare problems, especially in epidemiology and public health, where randomized trials are often impossible. These methods help estimate treatment effects and correct biased observational studies rather than relying on prediction alone. As work from the UCLA Cognitive Systems Laboratory emphasized, this marks a key shift from traditional machine learning: in medicine, it matters whether something causes an outcome, not only whether it is associated with it.

# aside: Causal inference gives medicine a way to ask “what if?” without experiments, but answers still depend on how much the data remembers.
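A workhorse behind many of these applications is Pearl’s backdoor adjustment: if a set of variables Z blocks every confounding path between treatment X and outcome Y, then P(Y | do(X = x)) = Σ_z P(Y | X = x, Z = z) · P(Z = z). The sketch below applies that formula to a hypothetical observational dataset in which sicker patients are both treated more often and recover less often. The counts are invented to show how naive association and the adjusted estimate can disagree, and the adjustment is only valid under the assumed graph, with severity as the sole confounder.

```python
# Hypothetical observational records: (severity, treated, recovered) -> count.
# Sicker patients are treated more often and recover less often.
counts = {
    ("severe", 1, 1): 40, ("severe", 1, 0): 40,
    ("severe", 0, 1): 5,  ("severe", 0, 0): 15,
    ("mild", 1, 1): 18,   ("mild", 1, 0): 2,
    ("mild", 0, 1): 56,   ("mild", 0, 0): 24,
}
SEVERITIES = ("severe", "mild")

def p_recover(treated, severity=None):
    """Observed P(recovered | treated), optionally within one severity stratum."""
    rows = {k: n for k, n in counts.items()
            if k[1] == treated and (severity is None or k[0] == severity)}
    return sum(n for k, n in rows.items() if k[2] == 1) / sum(rows.values())

def p_severity(severity):
    """Observed P(severity) across all patients."""
    return sum(n for k, n in counts.items() if k[0] == severity) / sum(counts.values())

def p_recover_do(treated):
    """Backdoor adjustment: sum over z of P(recovered | treated, z) * P(z)."""
    return sum(p_recover(treated, z) * p_severity(z) for z in SEVERITIES)

naive = p_recover(1) - p_recover(0)
adjusted = p_recover_do(1) - p_recover_do(0)
print(f"naive {naive:+.3f}, adjusted {adjusted:+.3f}")  # naive -0.030, adjusted +0.225
```

Read naively, the data says treatment slightly hurts; within each severity group it clearly helps. The adjustment recovers the second answer, but only because the graph says severity is the confounder to adjust for, which is the “how much the data remembers” caveat in practice.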

2002+ | When health records turn infrastructure

Widespread adoption of electronic health record (EHR) systems across hospitals and clinics transforms healthcare data from fragmented paper charts into machine-readable digital records. EHRs create the conditions for AI to emerge: large volumes of longitudinal, structured (and semi-structured) clinical data become accessible. The problem is that these systems aren’t built with modeling in mind. Data is patchy, unstandardized across vendors and systems, and difficult for researchers to access.

# aside: Medical records date back at least to around 1600 BC: the Edwin Smith Papyrus, an Egyptian surgical text written in hieratic script, documents individual cases and is thought to copy material as old as 3000 BC.

2011 | IBM Watson in Healthcare

After Watson wins Jeopardy! (YouTube), IBM applies its technology to oncology to help physicians make treatment decisions.

# aside: More than 10 years after Watson wins the quiz show, IBM sells off Watson Health after failing to turn its futuristic TV performance into clinical outcomes.

2015 | Deep learning upends imaging diagnostics

In a series of studies, deep learning algorithms begin to match or outperform specialists such as radiologists and dermatologists at detecting certain conditions in medical images, notably in ophthalmology, dermatology, and oncology. These advances aren’t just about better models; they rely on software systems that can ingest, label, and scale large volumes of annotated clinical images, often curated in partnership with specialists.

# aside: AI output can look like magic, but it is an amalgamation: behind every response is clinical data created and enriched by physicians and other experts.

2018 | AI gets cleared to diagnose, solo

The FDA authorizes IDx-DR, an autonomous system that detects diabetic retinopathy in retinal images without a clinician needing to interpret them. It is the first time an AI device is permitted to make a screening decision on its own, a regulatory milestone that moves AI from decision support to decision maker.

# aside: For the first time, a tool, IDx-DR, didn’t just assist doctors; it didn’t need one to read the result. This may be remembered as the moment many physicians began to rethink their work and their profession.

2020 | AI thrives in a crisis

AI is rapidly developed and deployed to assist with COVID-19 diagnosis, triage, treatment modeling, and vaccine discovery. Systems are often trained on fragmented, rapidly evolving datasets, many crowd-sourced from overwhelmed hospitals. While effectiveness varies, the urgency of the pandemic made powerful, but imperfect, solutions necessary in the global public health response.

# aside: In China, the startup Infervision deployed its pneumonia-detecting algorithm to 34 hospitals (Wired) and reviewed more than 32,000 cases in just a few weeks.

2022 | Large Language Models enter the exam room

ChatGPT (released late 2022) performs at or near the passing level on multiple-choice medical licensing exam questions, and successors such as GPT-4 (2023) score well above it. Unlike earlier systems built on curated, structured medical data, these models are trained largely on text from the public internet, including books, journals, Wikipedia, Reddit, and more, and still show surprising diagnostic and medical ability.

# aside: GPT-4 passed the boards without accruing medical school debt, by copying everyone else’s work from the web (Mozilla Foundation).

2023 | AI designs medicine

Beyond simulation, generative AI starts producing drug candidates that enter clinical trials. These systems don’t just predict molecular behavior; trained on proprietary compound libraries and biomedical literature, they generate new chemical structures to match target parameters and can compress parts of a years-long discovery process into months.

# aside: An AI-designed drug candidate for idiopathic pulmonary fibrosis went from target discovery to preclinical candidate in about 18 months and entered phase II trials in 2023. Without the technology, discovery might have taken four to six years just to reach phase I.

2025 | Systems make their own rounds

AI systems are increasingly cleared for patient monitoring and personalized care recommendations. Built on real-time inputs from wearables, health records, and symptom reporting, these assistants promise continuous oversight and proactive guidance with less need for a clinician in the loop.

# aside: The U.S. Food and Drug Administration maintains the Artificial Intelligence-Enabled Medical Devices list, a regularly updated public list of AI/ML-enabled devices authorized for marketing in the U.S.

From now on, every two weeks

These events sit on a timeline, but they don’t follow a straight line. There is no tidy arrow from problem to solution; it’s a meandering path of messes, their consequences, and people making the best decisions they could with the tools and knowledge they had. Healthcare technology isn’t different from other industries only because the stakes are higher. It’s different because it tries to bridge structure and imbroglio, from the clinical, through the ethical, to the emotional. That takes all hands on deck: the problem has to be understood, worked over, and quantified where needed to get the right output, with the right advice, at the right time. The opportunity lies in widening the overlap between healthcare and tech.

We’ll keep processing this and more, and hope that you will follow along in your inbox every other week.

— FF / Automate.clinic team

As medical AI evolves, clinician expertise and real-world insight are crucial. Help shape the future of healthcare technology by highlighting overlooked milestones and making sure AI solutions are clinically meaningful and ethical, and that they improve patient outcomes. Email us to join the conversation and contribute to the next chapter in medical AI. If you like, we’ll credit your contributions in our first newsletter.

Processing:

Subscribe to the future of healthcare.

AC/P#001/Draft. A rewind: 75 years of autonomous computing in healthcare

Sent:

November 30, 2025

Welcome to Processing #001, a first draft of the first email of a newsletter by the Automate.clinic team.

It’s tempting to treat every technological advance like it just happened, like magic. Starting this new venture made us want to pause and try to chronicle AI in healthcare from the start. To look at the ingredients of that magic.

When we did, it became obvious that medical breakthroughs don’t come to life clean. They’re stacked on old systems, outmoded research, and ideas that died and still somehow held them up. That only gets more tangled when you look at the histories of both clinical advancements with digital technology. Their timelines didn’t just interflow—they collided, left pieces that shape our now, some clicked, others created systems that need reform, but, hopefully, their understanding can point us to what we need to build next.

We are confident that AI in healthcare will be difficult to implement without physicians in the design process who deeply understand not only the history of AI but how it can be best deployed to the patient’s bedside.

The 75 year AI/healthcare rewind:

From “thinking machines” to predictive molecular drug design.

We didn't set out to compile a complete history, just enough to get a grasp of the events that shaped the journey of autonomous computing in care, and in the process further our perspective. The below is meant to be a kind of anti-amnesiac to remember that while AI seems new, it has been developed alongside medicine for a long time.

1950+ | Inviting A.I.

4 mathematicians, Claude Shannon, John McCarthy, Nathaniel Rochester, and Marvin Minsky, organize a weeks-long brainstorm about “thinking machines”. During planning they land on the term Artificial Intelligence as part of the title for the event that is joined by a handful of other researchers. This workshop is deemed instrumental in establishing AI as a distinct field of study, with one area for rich potential being staked on medical diagnostics. The event organizers are later considered as members of the founding fathers of AI—somehow, there were only fathers.

# aside: Then Assistant Professor of Mathematics, John McCarthy, is said to have chosen the neutral title AI because he was trying to avoid scrutiny by his peers. Among them, Norbert Wiener, said to be McCarthy’s role model, was a scientist and linguistics expert, and likely the first researcher to work on Esperanto, and surely tough to argue semantics with.

1970+ | Computer prescriptions become possible

Stanford researchers develop MYCIN, one of the first rule-based systems able to quiz doctors with a series of simple questions that it could match to ~600 rules in a clinical database to help diagnose infections and recommend antibiotics. With its diagnostics rating performing slightly better (a few percent, around 65% total acceptability) than the 5 faculty members in tests, and its ability to answer why, how, and why not something else when quizzed, it became clear that its new partitioning algorithm that ruled out entire diagnostics trees separated clinical fact-finding from more common, single-truth computing approaches.

# aside: MYCIN, the system’s name, was derived from the word suffix of many antibiotics.

1977 | Systems dialogue: initiated

Based on the early breakthroughs of SHRDLU (1968-1970) at MIT, a system able to handle natural language reasoning in a constrained domain, in 1977 ARPA funds a 5-year study called Speech Understanding Research or SUR. This speaking dialog system is developed with the ability to process spoken input in English and translate output to several European languages. While immediate applications were found in logistics, customer service, tech support, and education, progress also pointed the way for language models with enhanced conversational capabilities, including the medical field.

# aside: HARPY (one of the systems used) achieved 91% sentence accuracy using a vocabulary of 1000 words, which topped the expert’s program expectations.

1980s | Re: QMR Was: INTERNIST-1

Based on dialog system technology, INTERNIST-1 is developed at the University of Pittsburgh over 10 years to assist with internal medical diagnoses. INTERNIST-1, later known as Quick Medical Reference or QMR, stands apart because it does not adopt probabilistic models because its authors argue that medicine is more qualitative than quantitative. They go on to implement a rules-based differential diagnostic instead of using a Bayesian solution. This leads to custom logic for medical epistemology that can rule out entire decision trees, placing QMR in its own class of specialized solutions.

# aside: In not deploying Bayesian math, the QMR solution sidestepped decades of clinical resistance because of quantitative logic that would not let models easily reveal its reasoning. This is now often called “black box” AI.

1990+ | Bayesian networks resurface in clinical decision support

In a push and pull, probabilistic reasoning through Bayesian networks (BNs) gains popularity again for diagnosis and treatment planning. Its approach performs by leaps and bounds better in cardiac, cancer, and psychological conditions, but runs into issues in domains with less structured data or unclear causal pathways.

# aside: Bayesian networks struggle most with subjective and overlapping symptoms like in mental health diagnostics, and benefit most from clean, consistent data structures, that are often not easy to come by.

1995+ | Modeling cause, not just correlation

As the limitations of classic Bayesian networks became clearer, researchers like Judea Pearl (Causality: Models, Reasoning, and Inference) and Peter Lucas (work in Alzheimer’s detection) introduce formal causal graphs and do-calculus, which reduce complex interventional questions into observable probabilities, to better medical reasoning. While Pearl’s theory gains traction, use lags because healthcare rarely produces the kind of interventional data his models require; randomized, controlled, and counterfactual.

# aside: In Healthcare “just try run the experiment” often isn’t an option like it is in other industries. Ethical, logistical, and privacy limits require that causality has to be inferred, and not observed.

# aside 2: Judea Pearl explaining why he thinks his work discovered conscious AI, Youtube.

(Video production advisement: Dramatic.)

2000+ | Causal inference, applied

Following Judea Pearl’s theory, researchers begin applying do‑calculus and causal graphs to real‑world healthcare problems—especially in epidemiology and public health, where randomized trials are often impossible. These methods help estimate treatment effects and correct observational studies without relying solely on prediction. As published by the UCLA Cognitive Systems Laboratory, this marked a key shift from traditional machine learning: in medicine, it mattered whether something caused an outcome—not only if it was associated.

# aside: Causal inference gives medicine a way to ask “what if?” without experiments, but answers still depend on how much the data remembers.

2002+ | When health records turn infrastructure

Widespread adoption of EHR systems across hospitals and clinics transforms healthcare data from fragmented paper charts into machine-readable digital records. EHRs create the conditions for AI to emerge: large volumes of longitudinal, structured (and semi-structured) clinical data are now accessible. The problem is that these systems aren’t built with modeling in mind. Data is spotty across vendors, unstandardized across systems, and difficult to access for researchers.

# aside: Medical records date back as long as 1,600-3,000 BC as found by archaeologists in translations of Egyptian hieroglyphic papyri.

2011 | IBM Watson in Healthcare

After winning Jeopardy! (YouTube), IBM Watson technology was used in oncology to assist physicians make treatment decisions.

# aside: More than 10 years after Watson wins the quiz show, IBM sells off Watson Health after failing to turn its futuristic TV performance into clinical outcomes.

2015 | Deep learning upends imaging diagnostics

Deep learning algorithms start to outperform radiologists and dermatologists in detecting certain conditions from medical imaging—especially in fields like ophthalmology, dermatology, and oncology. These advances aren’t just about better models—they rely on software systems capable of ingesting, labeling, and scaling large volumes of annotated clinical images, often curated in partnership with specialists.

# aside: AI output looks like magic, but it's an amalgamation and the driver behind every query’s response is clinical data created and enriched by human physicians and experts.

2018 | AI gets cleared to diagnose, solo

The FDA approves IDx-DR, an autonomous diagnostic system for detecting diabetic retinopathy in retinal images—without a clinician involved. It’s the first time an AI is allowed to make a medical decision independently, marking a regulatory milestone that shifts AI from decision-support to maker.

# aside: For the first time a tool, IDx-DR, didn’t assist doctors; it did not even need them. This might be remembered as a milestone moment that has many physicians rethink their work / profession.

2020 | AI thrives in a crisis

AI is rapidly developed and deployed to assist with COVID-19 diagnosis, triage, treatment modeling, and vaccine discovery. Systems are often trained on fragmented, rapidly evolving datasets, many crowd-sourced from overwhelmed hospitals. While effectiveness varies, the urgency of the pandemic made powerful, but imperfect, solutions necessary in the global public health response.

# aside: In China, the startup InferVision deployed its pneumonia‑detecting algorithm to 34 hospitals (Wired), and was able to review over 32,000 cases in just a few weeks.

2022 | Large Language Models enter the exam room

GPT-4 and similar AI models demonstrate medical knowledge that can be compared to physicians across multiple-choice licensing exams. Unlike prior systems trained on structured medical data, these models are trained on text scraped from the public internet—books, journals, Wikipedia, Reddit, and so on—with surprising advancements on diagnostic and medical ability.

# aside: GPT-4 passed the boards without accruing medical school debt by copying everyone else’s work from the web (mozillafoundation).

2023 | AI designs medicine

Beyond simulation, generative AI starts producing drug candidates that enter clinical trials. Systems don’t just predict molecular behavior; they can generate new chemical structures based on target parameters, are trained on proprietary compound libraries and biomedical literature, and can often compress yearlong drug development timelines into months.

# aside: An AI-designed drug for pulmonary fibrosis reached phase II trials—just 18 months after the molecule was created. Previous drug development timelines without the tech may have taken 4-6 years to only reach phase I.

2025 | Systems make their own rounds

Advanced AI systems receive regulatory approval for autonomous patient monitoring and personalized care recommendations. Built on real-time inputs from wearables, health records, and symptom reporting, these assistants offer continuous oversight and proactive guidance—without needing a clinician in the loop.

# aside The U.S. Food and Drug Administration maintains the Artificial Intelligence‑Enabled Medical Devices list: a publicly updated database of all AI/ML‑powered devices authorized for marketing in the U.S.

From now on, every two weeks

These events may be on a timeline, but they are not sequential. There is no proverbial arrow between problem → solution, it’s a meandering of messes, their consequences, and people making the best decisions they could, with the tools and knowledge they had. Healthcare technology is not just different from other industries because of the higher stakes. It’s different because it’s trying to bridge structure and imbroglio; from the clinical, over the ethical, to the emotional. This needs all hands on deck to be understood, worked over, quantized if needed, to get the right output, with the right advice, at the right time. In widening the overlap of the Venn of healthcare and tech is opportunity.

We’ll keep processing this and more, and hope that you will follow along in your inbox every other week.

— FF / Automate.clinic team

As medical AI evolves, clinician expertise and real-world insights are crucial. Help shape the future of healthcare technology by highlighting overlooked milestones and ensuring AI solutions are clinically meaningful, ethical, and improve patient outcomes. Email us to join the conversation and contribute to the next chapter in medical AI. We’ll credit your contributions for our first newsletter if you like.

Processing:

Subscribe to the future of healthcare.

AC/P#001/Draft. A rewind: 75 years of autonomous computing in healthcare

Sent:

November 30, 2025

Welcome to Processing #001, a first draft of the first email of a newsletter by the Automate.clinic team.

It’s tempting to treat every technological advance like it just happened, like magic. Starting this new venture made us want to pause and try to chronicle AI in healthcare from the start. To look at the ingredients of that magic.

When we did, it became obvious that medical breakthroughs don’t come to life clean. They’re stacked on old systems, outmoded research, and ideas that died and still somehow held them up. That only gets more tangled when you look at the histories of both clinical advancements with digital technology. Their timelines didn’t just interflow—they collided, left pieces that shape our now, some clicked, others created systems that need reform, but, hopefully, their understanding can point us to what we need to build next.

We are confident that AI in healthcare will be difficult to implement without physicians in the design process who deeply understand not only the history of AI but how it can be best deployed to the patient’s bedside.

The 75 year AI/healthcare rewind:

From “thinking machines” to predictive molecular drug design.

We didn't set out to compile a complete history, just enough to get a grasp of the events that shaped the journey of autonomous computing in care, and in the process further our perspective. The below is meant to be a kind of anti-amnesiac to remember that while AI seems new, it has been developed alongside medicine for a long time.

1950+ | Inviting A.I.

4 mathematicians, Claude Shannon, John McCarthy, Nathaniel Rochester, and Marvin Minsky, organize a weeks-long brainstorm about “thinking machines”. During planning they land on the term Artificial Intelligence as part of the title for the event that is joined by a handful of other researchers. This workshop is deemed instrumental in establishing AI as a distinct field of study, with one area for rich potential being staked on medical diagnostics. The event organizers are later considered as members of the founding fathers of AI—somehow, there were only fathers.

# aside: Then Assistant Professor of Mathematics, John McCarthy, is said to have chosen the neutral title AI because he was trying to avoid scrutiny by his peers. Among them, Norbert Wiener, said to be McCarthy’s role model, was a scientist and linguistics expert, and likely the first researcher to work on Esperanto, and surely tough to argue semantics with.

1970+ | Computer prescriptions become possible

Stanford researchers develop MYCIN, one of the first rule-based systems able to quiz doctors with a series of simple questions that it could match to ~600 rules in a clinical database to help diagnose infections and recommend antibiotics. With its diagnostics rating performing slightly better (a few percent, around 65% total acceptability) than the 5 faculty members in tests, and its ability to answer why, how, and why not something else when quizzed, it became clear that its new partitioning algorithm that ruled out entire diagnostics trees separated clinical fact-finding from more common, single-truth computing approaches.

# aside: MYCIN, the system’s name, was derived from the word suffix of many antibiotics.

1977 | Systems dialogue: initiated

Based on the early breakthroughs of SHRDLU (1968-1970) at MIT, a system able to handle natural language reasoning in a constrained domain, in 1977 ARPA funds a 5-year study called Speech Understanding Research or SUR. This speaking dialog system is developed with the ability to process spoken input in English and translate output to several European languages. While immediate applications were found in logistics, customer service, tech support, and education, progress also pointed the way for language models with enhanced conversational capabilities, including the medical field.

# aside: HARPY (one of the systems used) achieved 91% sentence accuracy using a vocabulary of 1000 words, which topped the expert’s program expectations.

1980s | Re: QMR Was: INTERNIST-1

Based on dialog system technology, INTERNIST-1 is developed at the University of Pittsburgh over 10 years to assist with internal medical diagnoses. INTERNIST-1, later known as Quick Medical Reference or QMR, stands apart because it does not adopt probabilistic models because its authors argue that medicine is more qualitative than quantitative. They go on to implement a rules-based differential diagnostic instead of using a Bayesian solution. This leads to custom logic for medical epistemology that can rule out entire decision trees, placing QMR in its own class of specialized solutions.

# aside: In not deploying Bayesian math, the QMR solution sidestepped decades of clinical resistance because of quantitative logic that would not let models easily reveal its reasoning. This is now often called “black box” AI.

1990+ | Bayesian networks resurface in clinical decision support

In a push and pull, probabilistic reasoning through Bayesian networks (BNs) gains popularity again for diagnosis and treatment planning. Its approach performs by leaps and bounds better in cardiac, cancer, and psychological conditions, but runs into issues in domains with less structured data or unclear causal pathways.

# aside: Bayesian networks struggle most with subjective and overlapping symptoms like in mental health diagnostics, and benefit most from clean, consistent data structures, that are often not easy to come by.

1995+ | Modeling cause, not just correlation

As the limitations of classic Bayesian networks became clearer, researchers like Judea Pearl (Causality: Models, Reasoning, and Inference) and Peter Lucas (work in Alzheimer’s detection) introduce formal causal graphs and do-calculus, which reduce complex interventional questions into observable probabilities, to better medical reasoning. While Pearl’s theory gains traction, use lags because healthcare rarely produces the kind of interventional data his models require; randomized, controlled, and counterfactual.

# aside: In healthcare, “just run the experiment” often isn’t an option the way it is in other industries. Ethical, logistical, and privacy limits mean that causality has to be inferred, not observed.

# aside 2: Judea Pearl explains why he thinks his work discovered conscious AI (YouTube).

(Video production advisement: Dramatic.)

2000+ | Causal inference, applied

Building on Pearl’s theory, researchers begin applying do‑calculus and causal graphs to real‑world healthcare problems, especially in epidemiology and public health, where randomized trials are often impossible. These methods help estimate treatment effects and correct biases in observational studies without relying solely on prediction. As published by the UCLA Cognitive Systems Laboratory, this marks a key shift from traditional machine learning: in medicine, it matters whether something causes an outcome, not only whether it is associated with one.

# aside: Causal inference gives medicine a way to ask “what if?” without experiments, but answers still depend on how much the data remembers.

2002+ | When health records turn infrastructure

Widespread adoption of electronic health record (EHR) systems across hospitals and clinics transforms healthcare data from fragmented paper charts into machine-readable digital records. EHRs create the conditions for AI to emerge: large volumes of longitudinal, structured (and semi-structured) clinical data become accessible. The problem is that these systems aren’t built with modeling in mind. Data is patchy across vendors, unstandardized across systems, and difficult for researchers to access.

# aside: Medical records date back as far as 3,000 to 1,600 BC, as found in translations of ancient Egyptian papyri.

2011 | IBM Watson in healthcare

After Watson wins Jeopardy! (YouTube), IBM applies the technology to oncology to help physicians make treatment decisions.

# aside: More than 10 years after its quiz-show win, IBM sells off Watson Health, having failed to turn its futuristic TV performance into clinical outcomes.

2015 | Deep learning upends imaging diagnostics

Deep learning algorithms start to match, and in some studies outperform, radiologists and dermatologists in detecting certain conditions from medical imaging, especially in ophthalmology, dermatology, and oncology. These advances aren’t just about better models. They rely on software systems capable of ingesting, labeling, and scaling large volumes of annotated clinical images, often curated in partnership with specialists.

# aside: AI output looks like magic, but it is an amalgamation: behind every response is clinical data created and enriched by human physicians and experts.

2018 | AI gets cleared to diagnose, solo

The FDA authorizes IDx-DR, an autonomous system that detects diabetic retinopathy in retinal images without a clinician interpreting the results. It’s the first time an AI is allowed to make a diagnostic decision independently, a regulatory milestone that shifts AI from decision support to decision maker.

# aside: For the first time, a tool, IDx-DR, didn’t assist doctors; it didn’t even need them. This may be remembered as the moment many physicians began to rethink their work and their profession.

2020 | AI thrives in a crisis

AI is rapidly developed and deployed to assist with COVID-19 diagnosis, triage, treatment modeling, and vaccine discovery. Systems are often trained on fragmented, rapidly evolving datasets, many crowd-sourced from overwhelmed hospitals. While effectiveness varies, the urgency of the pandemic makes powerful but imperfect solutions a necessary part of the global public health response.

# aside: In China, the startup InferVision deployed its pneumonia‑detecting algorithm to 34 hospitals (Wired) and reviewed more than 32,000 cases in just a few weeks.

2022+ | Large language models enter the exam room

GPT-4 and similar AI models demonstrate medical knowledge comparable to that of physicians on multiple-choice licensing exams. Unlike prior systems trained on structured medical data, these models are trained largely on text scraped from the public internet (books, journals, Wikipedia, Reddit, and so on), yet show surprising diagnostic and medical ability.

# aside: GPT-4 passed the boards without accruing medical school debt, by copying everyone else’s work from the web (Mozilla Foundation).

2023 | AI designs medicine

Beyond simulation, generative AI starts producing drug candidates that enter clinical trials. Trained on proprietary compound libraries and biomedical literature, these systems don’t just predict molecular behavior. They generate new chemical structures to match target parameters and, in some cases, compress multi-year drug development timelines into months.

# aside: An AI-designed drug for pulmonary fibrosis reached phase II trials, and its developer reports that going from target discovery to a preclinical candidate molecule took about 18 months. Without the tech, drug development may take 4 to 6 years just to reach phase I.

2025 | Systems make their own rounds

Advanced AI systems receive regulatory approval for autonomous patient monitoring and personalized care recommendations. Built on real-time inputs from wearables, health records, and symptom reporting, these assistants offer continuous oversight and proactive guidance—without needing a clinician in the loop.

# aside: The U.S. Food and Drug Administration maintains the Artificial Intelligence‑Enabled Medical Devices list, a publicly updated database of AI/ML‑powered devices authorized for marketing in the U.S.

From now on, every two weeks

These events sit on a timeline, but they don’t form a straight line. There is no proverbial arrow from problem → solution. Instead, there is a meandering of messes, their consequences, and people making the best decisions they could with the tools and knowledge they had. Healthcare technology isn’t different from other industries only because of the higher stakes. It’s different because it tries to bridge structure and imbroglio, from the clinical to the ethical to the emotional. That takes all hands on deck: to understand it, work it over, and quantify it where needed, so that the right output delivers the right advice at the right time. The opportunity lies in widening the overlap in the Venn diagram of healthcare and tech.

We’ll keep processing this and more, and hope that you will follow along in your inbox every other week.

— FF / Automate.clinic team